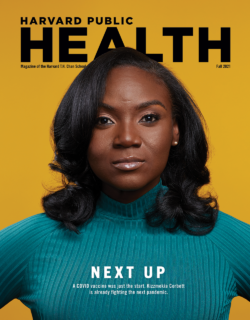

Feature

Artificial Intelligence’s Promise and Peril

John Quackenbush was frustrated with Google. It was January 2020, and a team led by researchers from Google Health had just published a study in Nature about an artificial intelligence (AI) system they had developed to analyze mammograms for signs of breast cancer. According to the study, the system didn’t just work; it worked exceptionally well. When the team fed it two large sets of images to analyze (one from the UK and one from the U.S.), it reduced false positives by 1.2 and 5.7 percentage points and false negatives by 2.7 and 9.4 percentage points, respectively, compared with the original determinations made by medical professionals. In a separate test that pitted the AI system against six board-certified radiologists in analyzing nearly 500 mammograms, the algorithm outperformed each of the specialists. The authors concluded that the system was “capable of surpassing human experts in breast cancer prediction” and ready for clinical trials.
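The study reports absolute reductions in error rates, so its figures are differences in percentage points rather than relative changes. A minimal sketch with invented confusion-matrix counts (not data from the study; `error_rates` is a hypothetical helper) shows how the two measures are computed and why they differ:

```python
def error_rates(tp: int, fp: int, tn: int, fn: int) -> tuple[float, float]:
    """Return (false-positive rate, false-negative rate), each in percent."""
    fpr = 100 * fp / (fp + tn)  # cancer-free screens wrongly flagged
    fnr = 100 * fn / (fn + tp)  # cancers that were missed
    return fpr, fnr

# Invented counts for 1,000 screens, 100 of which involve cancer.
reader_fpr, reader_fnr = error_rates(tp=80, fp=90, tn=810, fn=20)
model_fpr, model_fnr = error_rates(tp=85, fp=72, tn=828, fn=15)

# Absolute reduction: the difference of two rates, in percentage points.
fp_drop_points = reader_fpr - model_fpr  # 10.0 - 8.0 = 2.0 points
fn_drop_points = reader_fnr - model_fnr  # 20.0 - 15.0 = 5.0 points

# Relative reduction: the same drop expressed as a share of the reader's rate.
fp_drop_relative = 100 * fp_drop_points / reader_fpr  # 2.0 / 10.0 -> 20 percent

print(f"False positives: -{fp_drop_points:.1f} points ({fp_drop_relative:.0f}% relative)")
print(f"False negatives: -{fn_drop_points:.1f} points")
```

With these made-up numbers, the same improvement can be described as a 2-point drop or a 20 percent relative drop, so it matters which one a headline quotes.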

An avalanche of buzzy headlines soon followed. “Google AI system can beat doctors at detecting breast cancer,” a CNN story declared. “A.I. Is Learning to Read Mammograms,” the New York Times noted. While the findings were indeed impressive, they didn’t shock Quackenbush, Henry Pickering Walcott Professor of Computational Biology and Bioinformatics and chair of the Department of Biostatistics. He does not doubt the transformative potential of machine learning and deep learning—subsets of AI focused on pattern recognition and prediction-making—particularly when it comes to analyzing medical images for abnormalities. “Identifying tumors is not a statistical question,” he says, “it is a machine-learning question.”

But what bothered Quackenbush was the assertion that the system was ready for clinical trials even though nobody had independently validated the results in the weeks after publication. That was partly because validation was exceedingly difficult. The Nature article lacked details about the algorithm’s code that Quackenbush and others considered important for reproducing and testing the system. Moreover, some of the data used in the study was licensed from a U.S. hospital system and could not be shared with outsiders.

Over the course of his career, Quackenbush has been a vocal advocate for transparency and data sharing, so much so that President Barack Obama named him a White House Open Science Champion of Change in 2013 for his efforts to ensure that vast amounts of genomic data are accessible to researchers around the world. Reproducibility is the essence of the scientific method, Quackenbush says, and it is of the utmost importance when new technologies are being floated for use in human clinical trials. As a cautionary tale, Quackenbush mentions Anil Potti, a former Duke University professor who in the early 2000s claimed to have discovered genetic signatures that could determine how individuals with certain cancers would respond to chemotherapy. The technique sailed into human clinical trials even though other researchers reported that they were unable to reproduce Potti’s findings. Eventually it was revealed that Potti had falsified data and study results, and the whole house of biomedical cards came crashing down. Patients were harmed, lawsuits were filed, studies were retracted, and Quackenbush was asked to join a National Academies of Sciences, Engineering, and Medicine panel to investigate what went wrong. The panel, Quackenbush notes, concluded that the whole thing could have been avoided if other researchers had had access to Potti’s data, software, and methodological details.

To be clear, Quackenbush didn’t suspect subterfuge or anything nefarious in the Google Health study. Still, brash headlines about algorithms outperforming medical professionals made him bristle, and he was deeply worried that the system might be used on people who could have cancer before its findings had been reproduced. After all, it’s not uncommon for AI systems to work well in research settings and then flop in real-world settings.

To bring attention to the issue, Quackenbush and more than two dozen colleagues from various academic institutions wrote an article in Nature last October expressing their concerns with the Google Health study and calling for greater transparency among scientists working at the intersection of AI and cancer care. The intent was not to excoriate the researchers but to illuminate the significant challenges emerging in AI and health, challenges that are only going to grow in scope and scale as researchers continue probing the possibilities of this fast-evolving technology.

To their credit, the Google Health team replied to Quackenbush and colleagues, again in Nature, explaining that they had since added an addendum to the original study to provide more methodological details about how the algorithm was built. But they also underscored the very real challenges of working with licensed health data and proprietary technology, as well as the murky regulatory waters surrounding AI.

In many ways, Quackenbush’s concerns and exchanges with Google are a microcosm of the red-hot field of AI and health, where relatively young tech firms with bulging market caps, exquisitely talented engineers, and unprecedented computing power are planting their flag alongside more traditional players like life science companies, hospital systems, and academic institutions. The potential is extraordinary but so is the hype, and the research landscape is littered with potential pitfalls. It’s possible that AI will speed up drug discovery, streamline clinical practice, and improve diagnostics and care for millions of people on every rung of the global socioeconomic ladder. It’s also possible that bad data and biased algorithms will harm patients and that overinflated expectations of AI as a cure-all could in the long run undermine trust in science and desiccate investment in the field.

“These new technologies are rapidly emerging, and they’re raising really serious questions that we need to answer now,” Quackenbush says. “And in the long term, transparency among researchers will speed adoption of AI and help ensure we provide quality, unbiased care to patients.”

Lingo

What’s in a name?

- Artificial Intelligence: an umbrella term for computer systems that display properties of intelligence.

- Machine Learning: a subset of artificial intelligence largely focused on developing algorithms that use statistical techniques to learn from data sets, identify patterns, and make predictions.

- Deep Learning: a subset of machine learning that uses layered “artificial neural networks,” loosely inspired by the human brain, which learn patterns directly from data and gradually improve their predictive power as they are trained.

- Natural Language Processing: a field that draws on AI and linguistics to create computer programs that understand human language as it is spoken and written.
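To make the machine-learning definition concrete, here is a minimal sketch of one of the simplest such techniques, logistic regression fitted by gradient descent, trained on invented data. The single feature stands for a standardized measurement (a hypothetical stand-in, not a real clinical variable), and the helper names `sigmoid` and `train` are illustrative. Loosely speaking, deep learning stacks many layers of similar units.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def train(xs: list[float], ys: list[int], lr: float = 0.5, epochs: int = 1000) -> tuple[float, float]:
    """Fit p(y=1 | x) = sigmoid(w*x + b) by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n  # step against the average gradient
        b -= lr * grad_b / n
    return w, b

# "Learn from data": invented, standardized measurements with 0/1 outcome labels.
xs = [-4.0, -3.0, -2.0, -1.0, 1.0, 2.0, 3.0, 4.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train(xs, ys)

# "Make predictions": score values the model never saw during training.
for x in (-2.5, 0.5, 2.5):
    print(f"x = {x:+.1f} -> predicted probability {sigmoid(w * x + b):.2f}")
```

The model never receives a rule such as "positive values mean 1"; it infers the pattern from the labeled examples, which is the core idea behind the definitions above.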

Booms and Busts

The ways in which AI and its various subdisciplines are being deployed in public health are as fascinating as they are diverse. Researchers have produced AI-based methods to predict the source of foodborne illness, to rapidly scour millions of tweets for people expressing symptoms of influenza, and to analyze hospital records to identify allergic drug reactions. At the Harvard Chan School, S.V. Subramanian, professor of population health and geography, recently worked with researchers from Microsoft and the Indian government to predict and map the burden of childhood undernutrition across all of rural India’s nearly 600,000 villages. Meanwhile, Clarence James Gamble Professor of Biostatistics, Population, and Data Science Francesca Dominici and colleagues are using AI to identify causal relationships between environmental risks, such as air pollution, and poor health outcomes.

Health care was not a focus of early AI research. The concept of a machine that can think, reason, and learn like a human has fascinated mankind for generations. It was largely the stuff of sci-fi until 1950, when Alan Turing, a British mathematician and World War II code breaker, published a seminal paper, “Computing Machinery and Intelligence.” In the years that followed, researchers across disciplines pursued AI with gusto. In 1956, a team at RAND unveiled “Logic Theorist,” which is widely considered the first AI program and could prove mathematical theorems by mimicking human problem-solving skills. In the mid-1960s, Joseph Weizenbaum at MIT created ELIZA, a program that simulated human conversation and was a forerunner of the customer-service chatbots that are common today.

As more proofs of concept came to fruition, ambitions grew wildly, and some researchers made bold proclamations that AI would soon surpass human intelligence. Much of the pressure to innovate rapidly came from the U.S. military in the late ’60s and early ’70s, when the country was deep in the Cold War and keen to gain a competitive advantage in computing, especially in natural language processing, a subdiscipline of AI that researchers hoped would let pilots guide military aircraft by voice command and would translate Russian text and speech into English. But many researchers had oversold the potential of the technology, and progress plateaued. After a string of high-dollar failures, the Department of Defense and other key funders pulled out of the field, and the research community collapsed.

“It’s what people call an ‘AI winter,’” explains JP Onnela, associate professor of biostatistics. “We have seen at least two AI winters, the second of which took place in the late ’80s and early ’90s. These winters were essentially periods when expectations for what AI could accomplish were set very high and then when those expectations weren’t met, the field fell out of fashion and funding was cut back.”

Fast-forward to today, and AI is once again booming. A recent survey from the research firm RELX polled 1,000 executives across industries and found that 93 percent believe that AI—including machine learning and deep learning—is allowing their companies to better compete, while more than half reported adding positions to their payrolls focused on AI and other emerging technologies.

From controlling self-driving cars to executing high-speed stock trades to optimizing the performance of professional soccer teams, it seems like there’s not a corner of the world untouched by an algorithm.

Unlike in previous boom times for AI, however, health care is of the utmost interest to researchers this time around. One reason is that enormous amounts of health data can be extracted from electronic medical records, digital images, biobanks, mobile phones, and wearable devices. For Onnela, though, all the interest and all the buzz about big data and AI can engender trepidation about the future of the field.

“I think there’s a lot of hype in AI in general. And I think there’s especially a lot of hype when you couple AI with health, and it does concern me,” he says. “But at the same time, we have seen some very impressive gains, especially with deep learning. And one of the reasons we’ve seen these gains is because there is a ton of data that can be used to train these complex models. Does that mean we are making advances in AI in the sense of teaching machines to reason? No. But we are making advances in teaching these systems to be very good at recognizing patterns in data and that’s a big problem to overcome.”

Enthusiastic as he is, Onnela’s optimism is tempered by an important caveat: Just because there is a lot of data, he says, doesn’t mean it is good data. And the outcomes of training algorithms on bad data can be horrendous.

Algorithmic Bias

In 2019, Ziad Obermeyer of the University of California–Berkeley and Sendhil Mullainathan of the University of Chicago published a study in Science that set off alarm bells. They reported that a widely used algorithm meant to help hospital systems identify patients with complex needs who would benefit from follow-up care had exhibited significant racial bias. The algorithm used health costs as a proxy for health needs; that is, it assumed that patients with higher medical costs were more likely to be in poorer health and to need more care. The problem is that Black populations in the U.S. face significantly more barriers to care, so less money is spent on Black patients than on white patients with the same level of need. As a result, the authors explained, the algorithm regularly and wrongly concluded that Black patients were healthier than equally sick white patients. In other words, scores of Black patients were probably excluded from robust and possibly lifesaving care because a flawed line of reasoning was written into the computer code. In fact, when the researchers fixed the problem and reanalyzed the data, the percentage of Black patients eligible for extra care jumped from 17.7 percent to 46.5 percent.
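The mechanism is easy to reproduce in miniature. The sketch below uses an invented cohort, not the study’s data: two hypothetical groups, A and B, have identical distributions of health need, but less is spent on group B at every level of need. Ranking patients by cost (the proxy) rather than by need (the target) then quietly shrinks group B’s share of a limited program.

```python
from dataclasses import dataclass

@dataclass
class Patient:
    group: str   # "A" or "B" (hypothetical labels)
    need: int    # true health need, e.g. number of active chronic conditions
    cost: float  # past medical spending, the proxy the ranking relies on

def make_cohort() -> list[Patient]:
    """Invented cohort: identical need in both groups, lower spending for group B."""
    cohort = []
    for need in range(1, 11):
        cohort.append(Patient("A", need, cost=1000.0 * need))
        # Barriers to care mean less is spent on group B at the same level of need.
        cohort.append(Patient("B", need, cost=650.0 * need))
    return cohort

def share_of_group(selected: list[Patient], group: str) -> float:
    """Fraction of the selected patients who belong to `group`."""
    return sum(p.group == group for p in selected) / len(selected)

cohort = make_cohort()
k = 6  # hypothetical number of slots in a care-management program

by_cost = sorted(cohort, key=lambda p: p.cost, reverse=True)[:k]  # ranks on the proxy
by_need = sorted(cohort, key=lambda p: p.need, reverse=True)[:k]  # ranks on the target

print(f"Group B share when ranking by cost: {share_of_group(by_cost, 'B'):.2f}")
print(f"Group B share when ranking by need: {share_of_group(by_need, 'B'):.2f}")
```

With these made-up numbers, group B fills one of six slots when patients are ranked by cost but three of six when ranked by need, even though no line of the code mentions group membership.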

It’s a problem known as algorithmic bias, and it isn’t limited to health care, says Heather Mattie, a lecturer on biostatistics and co-director of the Health Data Science Master’s Program. Mattie cites one instance in which journalists at ProPublica examined an algorithm used in Florida to assess how likely criminal defendants were to commit future crimes. They found that Black defendants were often predicted to be at higher risk of recidivism than they actually were, while white defendants were often predicted to be at lower risk than they actually were.
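Findings like ProPublica’s come from comparing a model’s errors across groups rather than looking only at overall accuracy. A minimal audit sketch, using invented records and hypothetical group labels X and Y (not the Florida data), computes false-positive and false-negative rates separately for each group:

```python
from collections import defaultdict

def make_records(group: str, fp: int, tn: int, tp: int, fn: int) -> list[tuple[str, bool, bool]]:
    """Build invented (group, predicted_high_risk, reoffended) records from counts."""
    return ([(group, True, False)] * fp + [(group, False, False)] * tn
            + [(group, True, True)] * tp + [(group, False, True)] * fn)

def error_rates_by_group(records):
    """Return {group: (false_positive_rate, false_negative_rate, accuracy)}."""
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "tp": 0, "fn": 0})
    for group, predicted_high, reoffended in records:
        if reoffended:
            counts[group]["tp" if predicted_high else "fn"] += 1
        else:
            counts[group]["fp" if predicted_high else "tn"] += 1
    rates = {}
    for group, c in counts.items():
        fpr = c["fp"] / (c["fp"] + c["tn"])  # labeled high risk, did not reoffend
        fnr = c["fn"] / (c["fn"] + c["tp"])  # labeled low risk, did reoffend
        acc = (c["tp"] + c["tn"]) / sum(c.values())
        rates[group] = (fpr, fnr, acc)
    return rates

records = (make_records("X", fp=4, tn=6, tp=8, fn=2)
           + make_records("Y", fp=1, tn=9, tp=5, fn=5))

for group, (fpr, fnr, acc) in sorted(error_rates_by_group(records).items()):
    print(f"group {group}: FPR={fpr:.0%}  FNR={fnr:.0%}  accuracy={acc:.0%}")
```

Both invented groups get 14 of 20 predictions right, yet group X is wrongly labeled high risk far more often and group Y is wrongly labeled low risk far more often. A single accuracy figure would hide exactly the pattern the journalists reported.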

In another instance, Joy Buolamwini, a Ghanaian American researcher at MIT and founder of the Algorithmic Justice League, reported that facial recognition software had trouble reading the faces of people with darker skin. When she tested the technology on herself, she discovered that the software worked better when she wore a white mask than it did on her actual face.

Such bias is pernicious in any instance. For algorithms that help guide health care decisions throughout entire hospital systems, the effects could be deadly. “There are a lot of blind spots when it comes to algorithmic bias,” Mattie says. “Not training these machine-learning models on data that is truly representative of the patient population is a huge issue and one of the biggest drivers of bias. But another driver of bias is the lack of diversity among the teams that are actually creating these systems and algorithms.”

The good news is that many of these algorithmic biases can be prevented or fixed. Deborah DiSanzo, an instructor of health policy and management and president of Best Buy Health, says there are two main ways in which bias is introduced: “You have bias in your data and you have bias in your algorithm.” On the data side, she’s optimistic that the rise of electronic health records will soon allow far more inclusive and representative data sets to be produced and used to train AI systems. She points to the 2009 passage of the Health Information Technology for Economic and Clinical Health Act as an important catalyst for digitizing data and sharing it. Still, she notes, electronic health data is often “very dirty”: not always accurate, frequently incomplete, and occasionally duplicated.
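Some of the data problems DiSanzo lists (duplicate records, missing values, groups that are barely represented) can be measured before any model is trained. A minimal sketch, with invented rows and hypothetical field names rather than any real EHR schema:

```python
from collections import Counter

# Invented EHR-style rows; field names are hypothetical and None marks a missing value.
rows = [
    {"patient_id": "p1", "race": "Black", "hba1c": 7.2},
    {"patient_id": "p2", "race": "white", "hba1c": None},
    {"patient_id": "p2", "race": "white", "hba1c": None},  # exact duplicate record
    {"patient_id": "p3", "race": None, "hba1c": 6.1},
    {"patient_id": "p4", "race": "white", "hba1c": 5.8},
    {"patient_id": "p5", "race": "Asian", "hba1c": 6.4},
]
FIELDS = ("patient_id", "race", "hba1c")

def audit(rows, fields, group_field):
    """Drop exact duplicates, then count missing values and group representation."""
    seen, deduped, duplicates = set(), [], 0
    for row in rows:
        key = tuple(row.get(f) for f in fields)
        if key in seen:
            duplicates += 1
            continue
        seen.add(key)
        deduped.append(row)
    missing = {f: sum(r.get(f) is None for r in deduped) for f in fields}
    groups = Counter(r.get(group_field) or "unrecorded" for r in deduped)
    return duplicates, missing, groups

duplicates, missing, groups = audit(rows, FIELDS, group_field="race")
print("duplicates removed:", duplicates)
print("missing values:", missing)
print("records per group:", dict(groups))
```

This only catches exact duplicates; real records for the same patient often differ slightly across systems, which calls for proper record linkage. The group counts are the first, crude check on whether training data resembles the patient population.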

As for writing bias into the algorithm, that mostly boils down to poorly designed questions and logical oversights, which can stem from a lack of domain expertise but also from a lack of diversity among the teams writing the code. DiSanzo has had a long career in health care, having held leadership positions with Hewlett-Packard, Philips Healthcare, and IBM, where she was the general manager of Watson Health. She says that the industry as a whole is increasingly aware of algorithmic bias and the importance of good data and diverse teams. It’s not a problem that will be fixed overnight, though, and it’s imperative that developers take the lead on rooting out bias, she says. “Eventually, we’ll have interoperability standards. Eventually, we’ll have really good health information exchanges. Eventually, we’ll get to the point where we have really diverse data across race, gender, and socioeconomic backgrounds to build and train our algorithms with,” she says. “But for now, it’s incumbent on the companies building the algorithms to identify the bias in the data, to understand the bias in the algorithm, and to account for it.”

Policy Slog

There’s another speed bump at the intersection of AI and health that has nothing to do with computational power or biased data sets and everything to do with the often-behemoth bureaucracies tasked with regulating the technology. “If governments are serious about innovation and serious about improving health care, they need to look at not just scientific innovation or technology innovation but also policy innovation to create an enabling ecosystem for AI and machine learning,” says Rifat Atun, professor of global health systems. “If you want to know what’s going to prevent AI from being applied in health care, it’s the paucity of innovation on the policy side.”

From a regulatory perspective, these technologies pose challenges at every turn. Atun says that many existing policies governing health care, such as the Health Insurance Portability and Accountability Act, were crafted long before these algorithms took shape and will need updating as machine learning continues to proliferate. That, of course, is easier said than done. It can take years for national or international policy to crystallize. In some cases, Atun says, the technology is evolving so quickly that it is already antiquated by the time frameworks regulating it are crafted, debated, approved, and implemented.

For the most part, Atun is quite bullish on the prospects of AI in health. He says that we’re living in a technological renaissance that’s inspiring biomedical innovation at a scale we’ve never seen, and he’s confident that issues like algorithmic bias and access to computing power can be solved. One of the greatest upsides of AI in health, from his perspective, is the benefit it could provide in low-resource countries, where persistent shortages of human and financial resources limit the quality and quantity of health care. In Rwanda, for instance, a country of about 13 million people, there were only 11 practicing radiologists in 2015. An algorithm that could quickly scan hundreds of images and flag the ones that need follow-up would dramatically improve workflow.
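The workflow gain described here comes from reordering a radiologist’s queue rather than replacing the radiologist. A minimal sketch, assuming a hypothetical model has already assigned each image a probability of abnormality (the image IDs, scores, and `capacity` parameter are invented for illustration):

```python
import heapq

def triage(scores: dict[str, float], capacity: int) -> list[str]:
    """Return up to `capacity` image IDs, highest model score first."""
    # heapq.nlargest selects the top entries without fully sorting the queue.
    top = heapq.nlargest(capacity, scores.items(), key=lambda item: item[1])
    return [image_id for image_id, _ in top]

# Invented scores from a hypothetical model: probability that an image is abnormal.
scores = {
    "img-001": 0.12,
    "img-002": 0.91,
    "img-003": 0.47,
    "img-004": 0.88,
    "img-005": 0.05,
}

print(triage(scores, capacity=2))  # ['img-002', 'img-004']
```

In this design the model only decides which studies are read first; `capacity` stands in for how many a scarce specialist can review early in a shift, and the remaining images stay in the queue for human reading.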

Applying AI and machine learning in low-resource settings, where hospitals might not have steady access to electricity, let alone sufficient IT infrastructure, might seem like putting the cart before the horse. As a counterpoint, Atun cites the telephone. “For a long time, many low-income countries didn’t have telephone networks. They didn’t have the money or resources to invest in building these land-based networks of cables and poles crisscrossing their country and to pay for fixed-line telephones,” he says. “And now we can see that they didn’t need to follow that trajectory. Many leapfrogged straight to satellite-based communication networks and mobile phones and now the technology is ubiquitous across the developing world.”

While a similar path is possible for AI, Atun says, policymakers, think tanks, and governments in rich nations and poor nations need to do their part. He’d like to see efforts aimed at identifying which public health interventions can most cost-effectively modify risk, disease progression, and health outcomes, and he’d also like changes in policies and practices to accommodate high-volume diagnostics.

“AI has the potential to transform health systems. But for this to happen, health systems and the policies that guide them also need to transform,” he says. “Pushing an emerging technology into a health system that was designed decades back is not going to help.”

Research

Tapping into AI at the Harvard Chan School

Here’s how researchers across departments are pushing forward the field of AI to better understand human health.

- Researchers led by Yonatan Grad of the Department of Immunology and Infectious Diseases analyzed the potential of machine learning to predict whether certain bacteria would be resistant to certain antibiotics. Such tools would save lives and cut down on inappropriate treatments, according to Grad and colleagues, but they caution that there are significant challenges to ensuring such models stay updated with pertinent data.

- The proliferation of electronic health records (EHRs) has created an unprecedented amount of biomedical data. Tianxi Cai, of the Department of Biostatistics, and colleagues are developing machine learning and natural language processing techniques to analyze EHR data, predict health risks, and improve diagnostics.

- Understanding how air pollution and other environmental threats affect populations requires analyzing massive amounts of granular data from numerous sources. Francesca Dominici, of the Department of Biostatistics, and colleagues are developing new AI tools to learn from data and identify causal links between exposure to environmental agents and health outcomes.

- Jeeyun Chung of the Department of Molecular Metabolism is part of a team that’s using advanced imaging techniques to capture exquisitely detailed pictures of single cells and developing machine learning methods to analyze the images and automatically identify cellular organelles.

- To pinpoint where children are most at risk of undernutrition in India, S.V. Subramanian, of the Department of Social and Behavioral Sciences, and colleagues created a machine learning prediction model to estimate the prevalence of key indicators of undernutrition, including stunting, underweight, and wasting, across India’s 597,121 villages.

- Andrew Beam, assistant professor of epidemiology, employs machine learning to develop decision-making tools that doctors can use to better understand and care for their patients. His lab is particularly focused on neonatal intensive care units, which Beam says underutilize the tremendous amounts of data generated by premature infant patients as part of their clinical care.

The Human Touch

Atun’s comment highlights the inherent tension of artificial intelligence finding its place in health care. It’s the same tension that caused Quackenbush to raise his eyebrows at the Google Health study. Over the last 20 years, the tech industry has been focused on “disrupting” any and all elements of society and business. It’s an industry where software engineers have been encouraged to “move fast and break things,” as Facebook CEO Mark Zuckerberg once put it. Meanwhile, over the last 2,000 or so years, the best health care practitioners have been deliberate and guided by the Hippocratic ideal of doing no harm.

Often lost in the conversation about AI is how deeply personal health is. “If we think about how you end up with a diagnosis of depression or brain cancer, it’s often because a family member, a friend, or a colleague points out that you haven’t been yourself lately or that you look run down,” says Onnela. His own research on digital phenotyping is focused on building methods to analyze data from personal digital devices—step counts, sleep cycles, number of text messages—to monitor the symptoms of people with mood disorders, and he’s running similar studies for people with amyotrophic lateral sclerosis and brain tumors. Early results show that the statistical learning methods he’s deployed are good at detecting behavioral patterns in the data that may serve as early indicators that a patient is running into trouble, whether it be a mental health episode or deteriorating motor functions. Taken to the extreme, this type of work could be described as using smartphones to capture an individual’s lived experience and replacing the empathetic eyes of a partner or friend with the statistical prowess of an algorithm.
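Onnela’s statistical learning methods are not described in detail here, so the sketch below swaps in a deliberately simple technique, a rolling z-score, to illustrate the basic idea of flagging a departure from a person’s own routine. The step counts, the seven-day window, and the threshold of three standard deviations are all invented assumptions, and `flag_unusual_days` is a hypothetical helper.

```python
from statistics import mean, stdev

def flag_unusual_days(values: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return indices of days that deviate strongly from the preceding `window` days.

    `window` must be at least 2, because stdev needs two data points.
    """
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]  # this person's own recent routine
        mu, sd = mean(baseline), stdev(baseline)
        if sd > 0 and abs(values[i] - mu) / sd > threshold:
            flagged.append(i)
    return flagged

# Invented daily step counts: a stable routine, then a sharp drop on day 10.
steps = [8200, 7900, 8500, 8100, 7800, 8300, 8000, 8400, 7950, 8150, 2100, 8050]
print(flag_unusual_days(steps))  # [10]
```

Comparing each person with their own recent baseline, rather than with a population average, is what makes this kind of monitoring personal. A single extreme day also inflates the baseline for the following week, which is one reason real methods tend to use more robust statistics than this sketch.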

The extent to which today’s computer scientists and mathematicians will be able to keep pace with the hype surrounding AI at the moment is unclear. What’s crystal clear, however, is that machine learning and deep learning are being stitched into our health systems for the long run. AI is here to stay. But the path forward requires substantial trust in science. It also requires extraordinary evidence that the technology works.

“The history of machine learning has demonstrated that things that are easy for people can be really hard for machines and vice versa,” Onnela says. “And I think that the biggest challenge going into the future is figuring out how we can train machines to see these things that we can pick up on intuitively.”