Opinion

It’s time to free public health from health care

In March 2021, I helped organize a mass COVID vaccination day on the Tule River Indian Reservation in Central California. The tribe’s concert venue was the only building with enough indoor space, but where would our equipment go? How would patients flow through the interior? And what information would convince the community to come?

The CDC website had detailed information on our clinical concerns: the temperature at which to store the vials, the types of syringes needed for jabs, and the paperwork to give patients. But I could not find solutions to our nonmedical questions. Fortunately, we had a staff member with emergency management experience design the event layout, but our signage and publicity campaign lagged, resulting in far fewer attendees than expected.

This clinical, biomedical bent of public health practice was visible in other ways during the COVID response: Risk levels nationally were based on hospital bed capacity, and agency recommendations focused on helping individuals avoid getting sick rather than on people’s need to work and go to school, a problem public health officials acknowledged earlier this year. This bias is not unique to the pandemic. Medicine has dominated our public health system since its inception, constraining our health outcomes and marginalizing the social and communal aspects of health.

As policymakers debate funding public health capacity, emphasizing prevention, and building a team-based approach, we can learn from three key moments in history, all of which show us why de-medicalizing would actually improve public health practice.


A medical school bias dominates public health education

The medicalization of public health starts with our political leaders. Laws in 21 states and the District of Columbia require the lead state health officer to have a medical degree, aligning with a 1959 position statement of the American Public Health Association. Training and education from schools of public health should be required instead, but public health education became entwined with medicine with assistance from two influential men: William Welch, the first dean of the Johns Hopkins School of Medicine, and Abraham Flexner, a famed medical education reformer who had backing from the Rockefeller Foundation to fund the first school of public health.

Both men viewed public health largely through a medical lens, focused on the prevention and management of disease. But some of their contemporaries disagreed. Edwin Seligman, a professor of political science at Columbia University, proposed a school based on viewing public health as a social science with political economy at its core. Seligman’s vision was a precursor to social epidemiology and the social determinants of health, the nonmedical factors that determine so much of our health.

It should be no surprise, however, that a Flexner-led committee chose to fund Johns Hopkins, with its biomedically focused curriculum, as the first school of public health. For decades after, nearly half of Hopkins public health graduates were physicians, which shaped the early public health workforce. The choice reverberated to other schools, including Columbia, which centered biomedicine in its eventual school and marginalized education in social and political factors for decades.

Congress rejects a national health program—twice

Practitioners in the early and mid-twentieth century also debated how to reconcile individual medicine, which was seen as curative, with public health services, which were considered preventative. A key figure in this debate was Thomas Parran, the U.S. Surgeon General from 1936 to 1948. Parran was a confidant of President Franklin Roosevelt and helped get public health funding included in the final legislation for the New Deal. (His record as a public health champion is, however, marred by his approval of Public Health Service research that infected more than 1,300 Guatemalans with syphilis during his last two years as surgeon general.) But his proposal for health insurance splintered political support and failed. When Truman became president, Parran convinced him to back a congressional proposal for a comprehensive national health program. But Parran, Truman, and their congressional allies were outmaneuvered by the American Medical Association, which argued the bill gave too much control to the federal government.

Public health and health care had a tumultuous relationship throughout the next few decades. The two systems bifurcated, a split accentuated by medicine moving into large hospitals and by the expansion of health insurance under Medicare and Medicaid, which bypassed public health departments. By 1988, national leaders were describing the public health system as falling into “disarray.” It has never fully recovered.

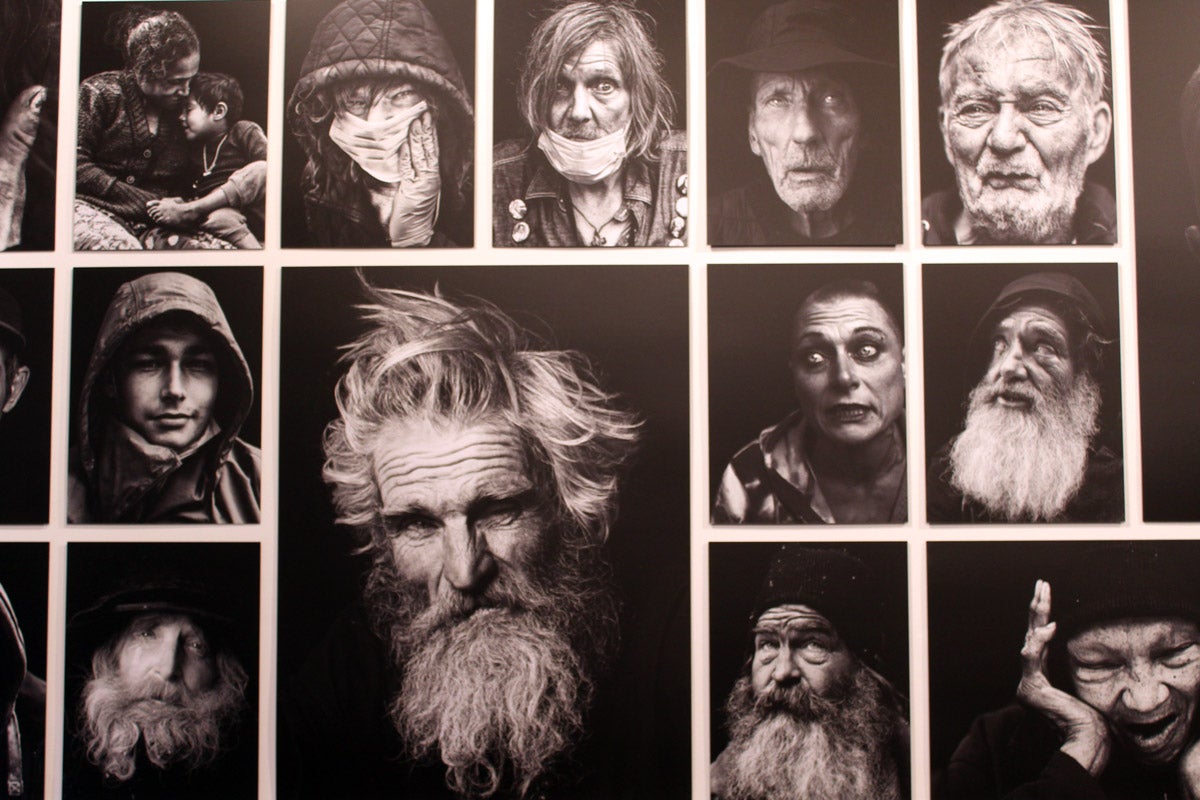

Jack Geiger, photographed in 2013 next to pictures of his former patients and associates in Mississippi, where he led an early initiative in what would now be understood as social determinants of health

Bebeto Matthews / AP Photo

Health centers innovate—but can’t fund—social services

Efforts to merge social services with health care resurfaced in the 1960s, when a federal agency focused on ending poverty created what we would now call federally qualified health centers (FQHCs). These were inspired by Jack Geiger, an American medical student who had worked at a health center in South Africa that also offered school meal programs, community vegetable gardens, health education, and more. During President Lyndon B. Johnson’s “War on Poverty,” the government provided grants for two demonstration health centers that offered medical care, water supply protection in rural areas, a 600-acre vegetable farm, home repair, and even a bus system for patients who lacked transportation. The expectation was that the grants would be slowly phased out as the centers came to rely on billing Medicare and Medicaid to support all their services. However, this billing never took off because state-level restrictions limited the spread of Medicaid. By Nixon’s second term, financial concerns forced the centers to focus on billable medical services and cut back on other social services.

Today, there are nearly 1,400 FQHCs, which are federally funded, and they deliver health care to more than 30 million people in underserved areas. But they still lack the holistic support that Geiger envisioned.

A new chance to learn from history

Today, the ideas of Seligman, Geiger, and Parran are being revived. At schools of public health, accreditation requirements are deemphasizing medical skills and focusing more on advocacy, leadership, and policymaking. The CDC, meanwhile, aims to create a nationwide technology infrastructure connecting public health agencies and health care providers. It will hopefully lay the groundwork for more coordination between federal, tribal, territorial, state, and local public health agencies and health care organizations. And the Centers for Medicare & Medicaid Services is approving demonstration projects that use Medicaid funds to pay for social services in 20 states, a number that is growing.

But we need to do more to de-medicalize public health. We can start by changing laws and policies that give preference to physicians as public health leaders. For example, although I am a tribal public health officer in California, I am legally excluded from the California Conference of Local Health Officers because the state requires public health leaders to have an M.D., and I do not. Participating would help me build relationships with county and state colleagues as well as learn more about state plans for public health.

We should also recalibrate funding for public health research and services to focus on the social factors that largely determine our health. In just one glaring example of the neglect of these factors, the National Institutes of Health spent only around nine percent of its 2022 budget on studying them. And we should expand federal funding for general public health services so that staff like me can decide where our community needs it most, rather than having it earmarked for the prevention of specific diseases.

It took decades to get the public health system we have today. Because of its failures during COVID, we have an opportunity to reform. We can learn from the past to expand the vision and practice of public health beyond medicine. Health is a team sport, and neither health care nor public health can work without the other.

Source icons: efuroku / iStock, Ognjen18 / iStock


For too long, a bias toward medicine has limited public health's potential.

Written by Eric Coles

This article originally appeared in Harvard Public Health magazine: https://harvardpublichealth.org/policy-practice/public-health-history-has-many-mistakes-we-can-learn-from-them/


